Metadata Governance Day

As metadata is shared and linked, the gaps and inconsistencies in it are exposed. In the dojo you will learn how to set up a variety of features in Egeria to actively govern and maintain your metadata environment.

The dojo sessions are colour-coded like ski runs:

- Beginner session

- Intermediate session

- Advanced session

- Expert session

As you progress through the dojo, the colours of the sessions show how advanced your knowledge is becoming. The later sections are not necessarily harder to understand, but they build on knowledge from earlier sections.

The schedule also includes estimated times needed to complete each session. Even though a dojo is styled as a "day of intense focus", there is a lot of information conveyed, and you may find it more profitable to only complete one session in each sitting. Whichever way you choose to tackle the dojo, have fun and good luck - and do take breaks whenever you need to!

Metadata Governance Dojo starts here

The importance of metadata governance (25 mins)

Data, and the metadata that describes it, enables individuals and automated processes to make decisions. As trust grows in the availability, accuracy, timeliness, usefulness and completeness of the data/metadata, its use increases and your organization sees greater value.

Building trust

Trust is hard to build and easy to destroy. Maintaining trust begins with authoritative sources of data/metadata that are actively managed and distributed along well known information supply chains. This flow needs to be transparent and reliable - that is, explicitly defined and verifiable through monitoring, inspection, testing and remediation. It needs to be tailored to meet the specific needs of its consumers.

Consider this story ...

A tale about trust

Sam bought a new house in a little village that was 10 miles from where he worked. He is keen to live sustainably and was delighted to hear from his new neighbours that there was a good bus service that many of them used to get to work.

Sam stopped by the bus stop to look up the bus times for the next day.

Next morning he left in plenty of time. He was surprised there was no-one there waiting for the bus. No bus came for nearly an hour, making him late for work. He asked the driver what had happened to the earlier bus. The driver said he was not aware there was any problem.

Once at work he called the bus company. The clerk who answered the phone laughed and said that the timetable at the bus stop was out of date. The local council required them to display a timetable so the one that was there was from last year. It was ok because everyone knew the timetable.

Annoyed, Sam asked where he could get an up-to-date timetable for the bus. The clerk did not know but suggested he call head office and was nice enough to give him the telephone number. It took a number of calls to find the right person, who then willingly dictated the times to him. Sam asked how often the bus times changed and how he could keep up-to-date. He was told that changes occurred infrequently and when they did, the bus driver would announce it. Sam could also ring back to check for changes from time to time.

Is there any problem with the way that the bus company is operating? How confident do you think Sam is that the bus company will provide a reliable service to get him to work every day? What is the impact if Sam buys a car or uses a bike because he decides the bus company is not to be trusted?

Some thoughts on the case ...

Sam is likely to conclude that the bus company does not care about its customers - and is not focused on attracting new business by encouraging people to try new routes. This suggests it is doomed in the long run. If he misses the bus again because the bus times change then he is motivated to look for alternative solutions.

His perception of the quality of the bus service is as much affected by the quality of information about the buses as the actual frequency and reliability of the service itself.

If we translate this story to the digital world, organizations often provide shared services and/or data sets that other parts of the business rely on. It is not sufficient that these services/data sets are useful with high availability/quality. If potential consumers cannot find out about them, or existing consumers are disrupted by unexpected change, then the number of consumers will dwindle as they seek their own solutions, and the shared service loses relevance to the business.

The team providing the shared service should consider the documentation about it to be a key part of their deliverables. Capturing this documentation as metadata and publishing it to the open metadata ecosystem increases its findability and comprehensibility.

Meanwhile, back at the bus company ...

The managing director hears of Sam's experience and wants to understand how widespread a problem it is. She discovers that no-one is specifically responsible for keeping the timetables up-to-date at the bus stops, or ensuring up-to-date timetables are available for download/pick up. She finds a few conscientious souls who update the bus timetable at the bus stops they personally use. However, across the network, the information supplied at the bus stops is misleading because it is out-of-date.

It is often the case that if something is not being done, either:

- no-one is responsible for it, or

- it is very low on the priorities of the person who is responsible for it, or

- the person responsible for it does not have the resources to do the work.

Therefore, step one in solving such a problem is to appoint someone who is responsible for it, and ensure they have the motivation and resources to do the work.

In metadata governance, we refer to the person responsible for ensuring that metadata about a service or dataset is complete, accurate and up-to-date as the owner. The owner may not personally maintain the metadata since this may be automated, or be handled by others. However, if there are problems with the metadata, the owner is the person responsible for sorting it out.

Fixing the bus timetables

The managing director considered the inaccurate bus timetable information as a serious problem that urgently needed fixing. She discovered that the manager responsible for scheduling the bus drivers' shifts was the one who made changes to the timetable. These changes typically occurred whenever there was a persistent difficulty in assigning a bus driver to a route. Whenever this happened, he briefed the affected driver with the change and asked that they disseminate the information to their passengers.

The bus drivers' manager was given ownership for ensuring the bus timetables were accurate at each bus stop since he was responsible for the action that triggered a need to update the timetables. He also understood the scope of any change.

The bus drivers' manager was given a new assistant. Part of the assistant's role was to make changes to the master timetables when necessary, arrange for the downloadable timetables to deliver the new version, get printed copies to the bus station and tourist information office and add an article to the local paper describing the change. They would also drive around to the affected bus stops and update the timetable as well as ensure the affected buses had new copies of the bus timetable onboard for their regular customers.

In the example above, the managing director identified where the action was occurring that should trigger the change. She assigned an owner and ensured they had the resources (i.e. the new assistant) to ensure the updates were done.

When a change to the timetable occurred, the bus drivers' manager triggered the assistant to update the master bus timetable. The updated timetable was then transformed into multiple formats and disseminated to all the places where customers were likely to notice the change. The challenge was both to create awareness that the change had happened and to provide the updated information.

Metadata governance three-step process

We can generalise this process as follows, creating a reusable specification pattern for all forms of governance:

A three-step specification pattern of Trigger, Take Action and Make Visible.

For metadata governance, the Take Action step is typically an update to metadata. For example, if a new deployment of a database occurs in the digital world, it could trigger a metadata update to capture any schema changes, and then information about these changes is disseminated to the tools and consumers that need it.

The dissemination of specific changes to metadata can also act as a trigger for other metadata updates. For example, the publishing of changes to a database schema could trigger a data profiling process against the database contents. The data profiling process adds new metadata elements to the existing metadata, and hence the knowledge graph of metadata grows.

The specification pattern above applies whether the governance is manual or automated. All that changes is the mechanism.

Triggers may be time-based, or an unsolicited update to metadata by an individual. For example, data profiling may be triggered once a week as well as whenever the schema changes. A comment attached to a database description that reports errors in the data may trigger a data correction initiative.
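
The specification pattern can be sketched in a few lines of Python. This is a minimal illustration rather than Egeria code: `MetadataStore`, `profile_on_schema_change` and the `customer_db` element are hypothetical names invented for the example. It shows a schema update being recorded (Take Action) and published to subscribers (Make Visible), with that publication acting as the trigger for a profiling update.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MetadataStore:
    """Hypothetical in-memory stand-in for a metadata repository."""
    elements: dict[str, dict] = field(default_factory=dict)
    subscribers: list[Callable[[str, dict], None]] = field(default_factory=list)

    def update(self, name: str, properties: dict) -> None:
        # Take Action: record the metadata update.
        self.elements.setdefault(name, {}).update(properties)
        # Make Visible: tell every subscriber which properties changed.
        for notify in list(self.subscribers):
            notify(name, properties)


store = MetadataStore()


def profile_on_schema_change(name: str, changed: dict) -> None:
    # Trigger: a published schema change starts a (pretend) profiling run.
    if "schema" in changed:
        store.update(name, {"profile": {"columns": len(changed["schema"])}})


store.subscribers.append(profile_on_schema_change)

# Trigger: a new database deployment has been detected.
store.update("customer_db", {"schema": ["id", "name", "email"]})
print(store.elements["customer_db"])
```

The profiling update is published too, but because it does not contain a `schema` property it does not trigger another profiling run. The same shape applies if the trigger is a timer or a comment added by a person.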

Consider metadata as a collection of linked facts making up a knowledge graph that describes the resources and their use by the organization. The role of the tools, people and open metadata technology is to build, maintain and consume this knowledge graph to improve the operation of the organization.

Enriching customer service

The managing director of the bus company picks up a changing perspective in the head office employees since the implementation of the bus timetable management process. Rather than a focus on running the buses, there is a growing understanding that they are providing a service to their customers.

Eager to build on this change, the managing director encourages her employees to bring forward ideas that increase their customer service. These ideas include disseminating information about local events (along with information about how to get to the event by bus, of course :)).

As a result, the bus timetables began to include a calendar of local events. Event organizers were able to register their events with the bus company and negotiate for additional buses where necessary. New bus routes were identified and began to operate. The effect was that the bus company became an active member of the community, with increasing bus use and associated profits.

When metadata governance is done well, a rich conversation develops between service providers and their consumers that can lead to both improvements in the quality of the services and the expansion in the variety and amount of consumption; the real value of the service is measured by consumption.

New uses for the services will then emerge ... growing the vitality and value to the organization.

The open metadata ecosystem (15 mins)

The content of the data/metadata shared between teams needs to follow standards that ensure clarity, both in meaning and how it should be used and managed. Its completeness and quality need to be appropriate for the organization's uses. These uses will change over time.

The ecosystem that supplies and uses this data/metadata must evolve and adapt to the changing and growing needs of the organization because trust is required not just for today's operation but also into the future.

You can make your own choices on how to build trust in your data/metadata. Egeria provides standards, mechanisms and practices built from industry experiences and best practices that help in the maintenance of data/metadata:

- Egeria defines a standard format for storing and distributing metadata. This includes an extendable type system so that any type of metadata that you need can be supported.

- Egeria provides technology to manage, store and distribute this standardized metadata. This technology is inherently distributed, enabling you to work across multiple cloud platforms, data centres and other distributed environments. Collectively, a deployment of this technology is referred to as the open metadata ecosystem.

- Egeria provides connector interfaces to allow third party technology to plug into the open metadata ecosystem. These connectors translate metadata from the third party technology's native format to the open metadata format. This allows:

  - Collaboration

  - Blending automation and manual processes

  - Comprehensive security and privacy controls

- Egeria's documentation provides guidance on how to use this technology to deliver business value.
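
As a rough illustration of the translation a connector performs, the sketch below maps a made-up native table description onto a neutral structure. The native layout (`db`, `tbl`, `cols`) and the output field names are assumptions chosen for the example; they are not taken from Egeria's type definitions or connector interfaces.

```python
def to_open_format(native_table: dict) -> dict:
    """Translate a hypothetical native table description into a neutral format."""
    return {
        # A fully qualified name gives the element a stable identity across tools.
        "qualifiedName": f"{native_table['db']}.{native_table['tbl']}",
        "displayName": native_table["tbl"],
        "columns": [
            {"name": column, "dataType": data_type}
            for column, data_type in native_table["cols"]
        ],
    }


# Hypothetical description as a third party tool might return it.
native = {
    "db": "sales",
    "tbl": "orders",
    "cols": [("id", "INT"), ("placed_at", "TIMESTAMP")],
}

print(to_open_format(native))
```

Once every tool's metadata is expressed in one shared format, it can be linked, compared and governed without each consumer needing to understand every native format.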

In this dojo we will cover these mechanisms and practices, showing how they fit in the metadata update specification pattern described above. You can then select which are appropriate to your organization and when/where to consider using them.

Different categories of metadata (25 mins)

Categories of metadata

Metadata is often described as data about data. However, this definition does not fully convey the breadth and depth of information that is needed to govern your digital operations.

The categories of metadata listed below help you organize your metadata needs around specific triggers that drive your metadata ecosystem.

Technical metadata

The most commonly collected metadata is technical metadata that describes the way something is implemented. For example, technical metadata for common digital resources includes:

- The databases and their database schema (table and column definitions) configured in a database server.

- APIs and their interface specification implemented by applications and other software services to request actions and query data.

- The events and their schemas used to send notifications between applications, services and servers to help synchronize their activity.

- The files stored on the file system.

Technical metadata is the easiest type of metadata to maintain since many technologies provide APIs/events to query the technical metadata for the digital resources they manage.

To keep your technical metadata up-to-date you need to consider the following types of metadata update triggers:

- whenever new digital resources are deployed into production,

- events that indicate that the digital resources have changed,

- regular scanning of the deployed IT environment to validate that all technical metadata has been captured (and nothing rogue has been added).
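
The regular scan in the last trigger is essentially a reconciliation between what is deployed and what has been catalogued. A minimal sketch, assuming both sides can be reduced to sets of resource names (the names below are invented):

```python
def reconcile(deployed: set[str], catalogued: set[str]) -> dict[str, set[str]]:
    """Compare the deployed IT environment with the technical metadata."""
    return {
        # Deployed but not described: possibly rogue, certainly ungoverned.
        "uncatalogued": deployed - catalogued,
        # Described but no longer deployed: the metadata is out of date.
        "stale": catalogued - deployed,
    }


deployed = {"orders_db", "customer_db", "scratch_db"}
catalogued = {"orders_db", "customer_db", "legacy_db"}

print(reconcile(deployed, catalogued))
```

Each non-empty result is itself a trigger: an uncatalogued resource needs cataloguing or removal, and a stale entry needs archiving.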

Ideally you want your metadata governance to engage in the lifecycles that drive changes in the technical metadata - for example, adding management of technical metadata as part of the software development lifecycle (SDLC) or CI/CD pipelines.

Collecting and maintaining technical metadata builds an inventory of your digital resources that can be used to count each type of digital resource and act as a list to work through when regular maintenance is required. It also helps people locate specific types of digital resources.

Data content analysis results

The technical metadata typically describes the structure and configuration for digital resources. Analysis tools can add to this information by analysing the data content of the digital resources. The results create a characterization of the data content that helps potential consumers select the digital resources best suited for their needs.

Data content analysis is often triggered periodically, based on the update frequency that the digital resource typically experiences. It can also be triggered when the technical metadata is first catalogued or updated. If the digital resource is really important and used in analytics/AI, you need to check more often to validate that no significant changes have occurred.
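
To make "a characterization of the data content" concrete, here is a minimal sketch of profiling a single column. The measures chosen (row count, null count, distinct count, most common value) are illustrative assumptions; real analysis tools typically compute many more.

```python
from collections import Counter


def profile_column(values: list) -> dict:
    """Summarize the content of one column; None represents a missing value."""
    present = [value for value in values if value is not None]
    counts = Counter(present)
    return {
        "row_count": len(values),
        "null_count": len(values) - len(present),
        "distinct_count": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


country_codes = ["UK", "US", None, "UK"]
print(profile_column(country_codes))
```

Comparing the profile from one run with the previous run is a simple way to spot the significant changes mentioned above.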

Consumer metadata

Consumer metadata includes the comments, reviews and tags added by the users that are consuming the metadata and the digital resources it describes. This metadata is gathered from the tools through which the users consume the metadata and the digital resources. It is then used to assess the value and popularity of the metadata and digital resources to the broader community.

Metadata update triggers should focus on the tools where the consumer feedback is captured. Typically, each piece of consumer feedback is treated as a separate trigger. The feedback should then be distributed to the tools that are used by the owning team. This could trigger changes to the resource. Ideally, the owning team should be able to respond and demonstrate they are listening and taking action.

Subject area materials

Subject areas are topics or domains of knowledge that are important to the organization. Typically, they cover content that is widely shared across the organization and there is business value in maintaining consistency. The materials for a subject area typically include:

- Glossary definitions describing the meaning of data values,

- Reference data describing valid values and mappings for data values,

- Technical controls defining quality rules and processing rules - such as anonymization/encryption requirements,

- Terms and conditions of use,

- Governed data classifications such as level of confidentiality, expected retention period, how critical this type of data is ...,

- Data classes, which are logical types for data that are used to characterize data during analysis,

- Standard/preferred schemas and associated implementation snippets to guide developers to improve the consistency of data representation across the digital landscape.

Assets that are managed using the subject area's materials are said to be part of the subject area's domain. This is called the Subject Area Domain and is synonymous with Data Domain - although a subject area domain may manage assets that are not data assets (such as systems and infrastructure) which is why open metadata uses a more generic name.

Updates to the subject area materials are typically made offline, collected, and then disseminated together as a new release. Therefore, the metadata update trigger is often related to the release of a collection of subject area materials.

Governance metadata

Governance metadata describes the requirements of a particular Governance Domain and their associated controls, metrics and implementations. It is managed in releases in a similar way to subject area materials. Therefore, its releases act as triggers to further actions.

Organizational metadata

Organizational metadata describes the teams, people, roles, projects and communities in the organization. This metadata is used to coordinate the responsibilities and activities of the people in the organization. For example, roles can be defined for the owners of specific resources, and they can be linked to the profiles of the individuals appointed as owner. This information can be used to route requests and feedback to the right person.
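
The routing described above can be sketched as two lookups: from a resource to its owner role, and from the role to the person currently appointed to it. All names here (`owner_roles`, `appointments`, the people and resources) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Person:
    name: str
    email: str


# Role defined for the owner of each resource.
owner_roles = {"customer_db": "customer-data-owner"}

# Person currently appointed to each role.
appointments = {"customer-data-owner": Person("Ada", "ada@example.org")}


def route_feedback(resource: str) -> Optional[Person]:
    """Return the person who should receive feedback about a resource."""
    role = owner_roles.get(resource)
    if role is None:
        return None  # No owner role defined: a governance gap worth reporting.
    return appointments.get(role)


print(route_feedback("customer_db"))
```

Keeping the role separate from the person means a change of appointment only updates `appointments`; everything linked to the role stays valid.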

Organizational metadata is often managed in existing applications run by Human Resources and Corporate Security. Therefore, updates in these applications are used to trigger updates to the organizational metadata in the open metadata ecosystem.

Governance actions can trigger the creation of new roles and appointments to these roles. These elements can then be disseminated to the appropriate applications for information, verification and/or approval.

Business context metadata

An organization has capabilities, facilities and services. The digital resources it uses serve these purposes. When decisions need to be made as to which digital resources to invest in, it is helpful to understand which part of the business will be impacted.

Information about the organization's capabilities, facilities and services is called business context. Often an individual's core role focuses on these aspects. Linking them to the digital resources that they depend on (often invisible to these individuals) helps to raise awareness of the mutual dependency and understanding of the impact/value of change at either level.

Triggers that detect change in digital resources (for example an outage) can result in information flowing to the appropriate business teams. If the change (or outage) is extensive, the linked business context can be used to prioritize the associated work.
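
One way to use the linked business context during an extensive outage is to order the affected resources by the most critical business service each one supports. The sketch below assumes a simple numeric criticality score; the resources, services and scores are invented for the example.

```python
# Links from each digital resource to the business services it supports.
supports = {
    "orders_db": ["online-ordering", "invoicing"],
    "archive_fs": ["audit"],
    "test_db": [],
}

# Assumed criticality of each business service (higher is more critical).
criticality = {"online-ordering": 3, "invoicing": 2, "audit": 1}


def prioritise(affected: list[str]) -> list[str]:
    """Order affected resources by the most critical service they support."""
    def score(resource: str) -> int:
        services = supports.get(resource, [])
        return max((criticality[service] for service in services), default=0)

    return sorted(affected, key=score, reverse=True)


print(prioritise(["test_db", "archive_fs", "orders_db"]))
```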

Process metadata

Data is copied, combined and transformed by applications, services, processes and activities running in the digital landscape. Capturing the structure of this processing shows which components are accessing and changing the data. This Process Metadata is a key element in providing lineage, used for traceability, impact analysis and data observability.

The capture and maintenance of process metadata is typically triggered as process implementations are deployed into production.

Operational metadata

Operational metadata describes the activity running in the digital landscape. For example, process metadata could describe the steps in an ETL job that copies data from one database to another. The operational metadata captures how often it runs, how many rows it processed and the errors it found.

Operational metadata is often captured in log files. As they are created, they trigger the cataloging and linking of their information into other types of metadata.
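
As an illustration of turning log files into operational metadata, the sketch below summarizes how often each job ran, how many rows it processed and how many errors it found. The log line layout (`job=... rows=... errors=...`) is an assumption made for the example; real logs will need their own parsing.

```python
import re

# Assumed log layout: "<timestamp> job=<name> rows=<n> errors=<n>"
LOG_LINE = re.compile(r"job=(?P<job>\S+) rows=(?P<rows>\d+) errors=(?P<errors>\d+)")


def summarise_runs(lines: list[str]) -> dict[str, dict[str, int]]:
    """Aggregate run statistics per job from log lines."""
    summary: dict[str, dict[str, int]] = {}
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue  # Skip lines that do not describe a job run.
        stats = summary.setdefault(match["job"], {"runs": 0, "rows": 0, "errors": 0})
        stats["runs"] += 1
        stats["rows"] += int(match["rows"])
        stats["errors"] += int(match["errors"])
    return summary


log_lines = [
    "2024-01-01T02:00 job=copy_customers rows=100 errors=0",
    "2024-01-02T02:00 job=copy_customers rows=120 errors=2",
    "service restarted",
]
print(summarise_runs(log_lines))
```

The job name is what links each summary back to the process metadata that describes the job's steps.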

Metadata relationships and classifications

The other type of trigger to consider is when/where the metadata elements described above can be connected together and augmented.

This linking and augmentation of metadata has a multiplying effect on the value of your metadata.

You can think of the metadata described above as the facts about your organization's resources and operation. Metadata relationships that show how one element relates to another begin to show the context in which decisions are made and these resources are used.

Metadata classifications are used to label metadata as having particular characteristics. This helps group together similar elements, or elements that represent resources that need similar processing.
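
A small sketch may help to show the difference between the two. Relationships connect one element to another, whereas classifications are labels attached to a single element. The element names, relationship names and the `Confidential` label are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    name: str
    classifications: set[str] = field(default_factory=set)


elements = {
    name: Element(name)
    for name in ("customer_db", "email_column", "Email Address")
}

# Relationships: (source element, relationship name, target element).
relationships = [
    ("customer_db", "has_column", "email_column"),
    ("email_column", "means", "Email Address"),
]

# Classification: a label on one element.
elements["email_column"].classifications.add("Confidential")


def related(name: str) -> list[tuple[str, str]]:
    """Follow the relationships that start at the named element."""
    return [(rel, target) for source, rel, target in relationships if source == name]


def classified_as(label: str) -> list[str]:
    """Group together the elements that carry a particular label."""
    return sorted(e.name for e in elements.values() if label in e.classifications)


print(related("email_column"))
print(classified_as("Confidential"))
```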

Summary

Hopefully the discussion above has helped to illustrate that metadata is varied and can be built into a rich knowledge base that drives organizational objectives through increased visibility, utilization and management of an organization's digital assets.

Open metadata types (25 mins)

The open metadata type system

Knowledge about data is spread amongst many people and systems. One of the roles of a metadata repository is to provide a place where this knowledge can be collected and correlated, with as much automation as possible. To enable different tools and processes to populate the metadata repository we need agreement on what data should be stored and in what format (structures).

Open metadata subject areas

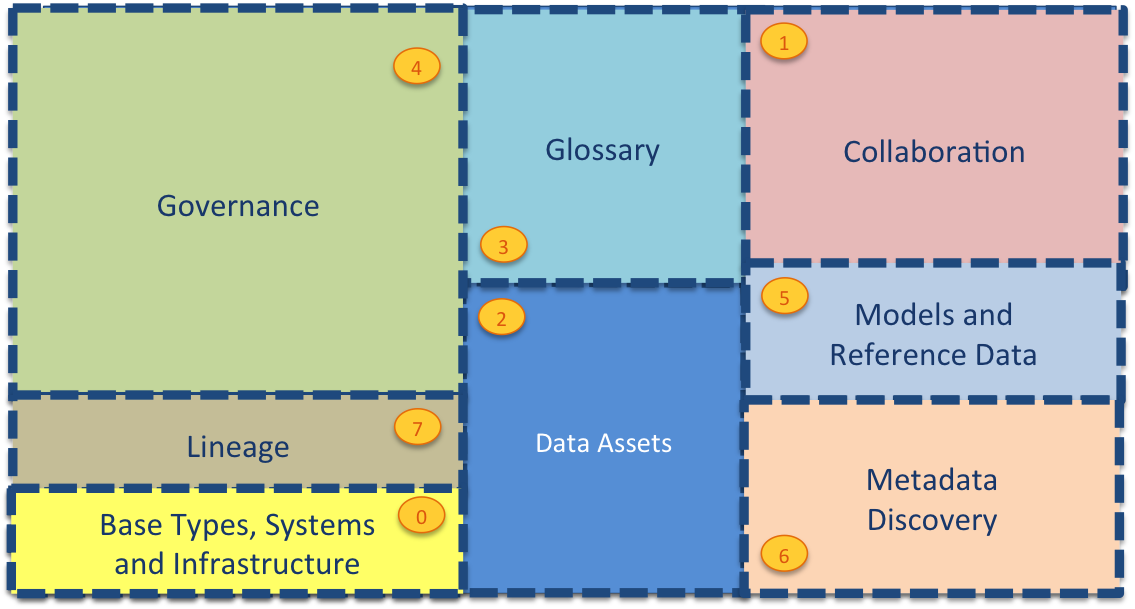

The different subject areas of metadata that we need to support for a wide range of metadata management and governance tasks include:

This metadata may be spread across different metadata repositories that each specialize in particular use cases or communities of users.

| Area | Description |
|---|---|
| Area 0 | describes base types and infrastructure. This includes the root type for all open metadata entities called OpenMetadataRoot and types for Asset, DataSet, Infrastructure, Process, Referenceable, SoftwareServer and Host. |
| Area 1 | collects information from people using the data assets. It includes their use of the assets and their feedback. It also manages crowd-sourced enhancements to the metadata from other areas before it is approved and incorporated into the governance program. |
| Area 2 | describes the data assets. These are the data sources, APIs, analytics models, transformation functions and rule implementations that store and manage data. The definitions in Area 2 include connectivity information that is used by the open connector framework (and other tools) to get access to the data assets. |
| Area 3 | describes the glossary. This is the definitions of terms and concepts and how they relate to one another. Linking the concepts/terms defined in the glossary to the data assets in Area 2 defines the meaning of the data that is managed by the data assets. This is a key relationship that helps people locate and understand the data assets they are working with. |
| Area 4 | defines how the data assets should be governed. This is where the classifications, policies and rules are defined. |
| Area 5 | is where standards are established. This includes data models, schema fragments and reference data that are used to assist developers and architects in using best practice data structures and valid values as they develop new capabilities around the data assets. |
| Area 6 | provides the additional information that automated metadata discovery engines have discovered about the data assets. This includes profile information, quality scores and suggested classifications. |
| Area 7 | provides the structures for recording lineage and providing traceability to the business. |

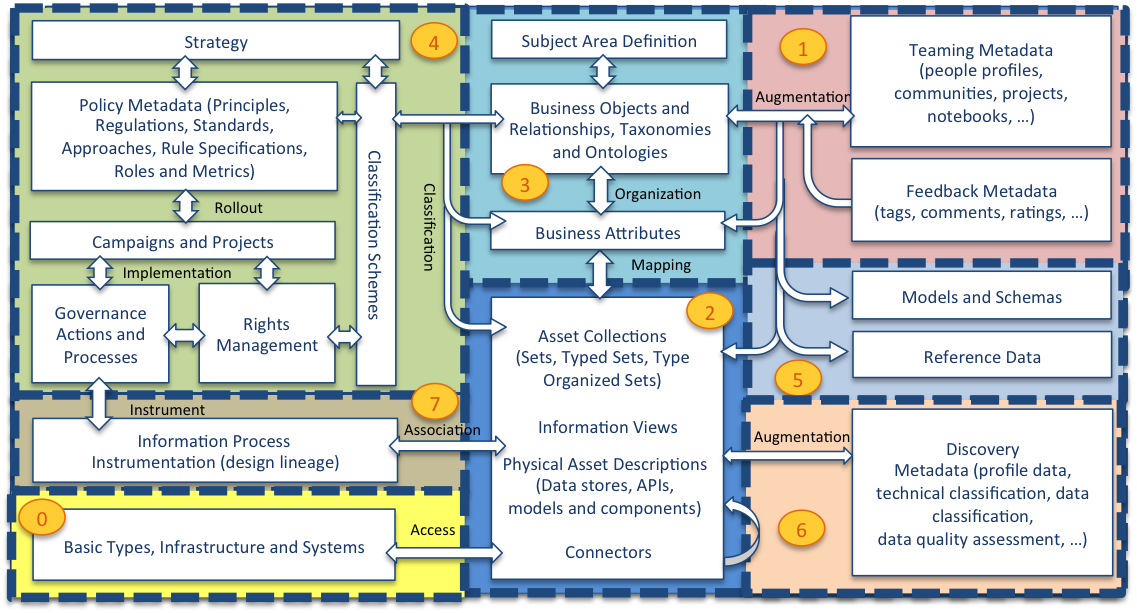

The following diagram provides more detail of the metadata structures in each area and how they link together:

Metadata is highly interconnected

Bottom left is Area 0 - the foundation of the open metadata types along with the IT infrastructure that digital systems run on such as platforms, servers and network connections. Sitting on the foundation are the assets. The base definition for Asset is in Area 0 but Area 2 (middle bottom) builds out common types of assets that an organization uses. These assets are hosted and linked to the infrastructure described in Area 0. For example, a data set could be linked to the file system description to show where it is stored.

Area 5 (right middle) focuses on defining the structure of data and the standard sets of values (called reference data). The structure of data is described in schemas and these are linked to the assets that use them.

Many assets have technical names. Area 3 (top middle) captures business and real world terminologies and organizes them into glossaries. The individual terms described can be linked to the technical names and labels given to the assets and the data fields described in their schemas.

Area 6 (bottom right) holds additional metadata captured through automated analysis of data. These analysis results are linked to the assets that hold the data so that data professionals can evaluate the suitability of the data for different purposes. Area 7 (left middle) captures the lineage of assets from a business and technical perspective. Above that in Area 4 are the definitions that control the governance of all of the assets. Finally, Area 1 (top right) captures information about users (people and automated processes), their organization (such as teams and projects) and their feedback.

Within each area, the definitions are broken down into numbered packages to help identify groups of related elements. The numbering system relates to the area that the elements belong to. For example, area 1 has models 0100-0199, area 2 has models 0200-0299, etc. Each area's sub-models are dispersed along its range, ensuring there is space to insert additional models in the future.
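
The numbering scheme means the area can be derived from a model number with integer division. A minimal sketch, assuming each area owns a block of 100 numbers and that the areas run from 0 to 7 as listed in the table above (`area_of` is a hypothetical helper, not part of Egeria):

```python
def area_of(model_number: str) -> int:
    """Return the open metadata area that a four-digit model number belongs to."""
    number = int(model_number)
    if not 0 <= number <= 799:
        raise ValueError(f"{model_number} is outside the range used by areas 0 to 7")
    # Each area owns a block of 100 model numbers.
    return number // 100


print(area_of("0130"))  # area 1
print(area_of("0299"))  # area 2
```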

Test yourself ...

Fill in the following table to map the areas of the open metadata type system to the different categories of metadata.

| Open Metadata Area | Categories of metadata covered by this Area - choose from Technical metadata, Data content analysis results, Consumer metadata, Subject area materials, Governance metadata, Organizational metadata, Business context metadata, Process metadata and Operational metadata. |
|---|---|
| 0 - Base Types, Systems and Infrastructure | |
| 1 - Collaboration | |
| 2 - Data Assets | |
| 3 - Glossary | |
| 4 - Governance | |
| 5 - Models and Reference Data | |
| 6 - Metadata Discovery | |
| 7 - Lineage | |

Answer

| Open Metadata Area | Categories of metadata covered by this Area |
|---|---|
| 0 - Base Types, Systems and Infrastructure | Technical Metadata |
| 1 - Collaboration | Consumer Metadata, Organizational Metadata |
| 2 - Data Assets | Technical Metadata |
| 3 - Glossary | Subject Area Materials |
| 4 - Governance | Subject Area Materials, Governance Metadata, Operational Metadata (associated assets) |
| 5 - Models and Reference Data | Technical Metadata, Subject Area Materials |
| 6 - Metadata Discovery | Data Content Analysis Results |
| 7 - Lineage | Business Context, Process Metadata |

Designing your metadata supply chains (45 mins)

Metadata supply chains

The open metadata ecosystem collects, links and disseminates metadata from many sources. However, it is designed in an iterative, agile manner, adding new use cases and capabilities over time.

Each stage of development considers a particular source of metadata and where it needs to be distributed to. Consider this scenario...

Database schema capture and distribution

There is a database server (Database Server 1) that is used to store application data that is of interest to other teams. An initiative is started to automatically capture the schemas of the databases on this database server. This schema information will be replicated to two destinations:

- Another database server (Database Server 2) is used by a data science team as a source of data for their work. An ETL job runs every day to refresh the data in this second database with data from the first database. The data is anonymized by the ETL job, but the schema and data profile remain consistent. If the schema in the first database changes, the ETL job is updated at the same time. However, the schema in the second database is not updated because the team making the change do not have access to it. Nevertheless, it must be updated consistently before the ETL job runs; otherwise the job will fail.

- The analytics tool that is also used by the data science team has a catalog of data sources to show the data science team what data is available. This needs to be kept consistent with the structure of the databases. The tool does provide a feature to refresh any data source schema in its catalog, but the team are often unaware of changes to their data sources, or simply forget to do it, and only discover the inconsistency when their models fail to run properly.

The integration of these third party technologies with the open metadata ecosystem can be thought of as having four parts:

1. Any changes to the database schema are extracted from Database Server 1 and published to the open metadata ecosystem.

2. The new schema information from Database Server 1 is detected in the open metadata ecosystem and deployed to Database Server 2.

3. The changes to Database Server 2's schema are detected and published to the open metadata ecosystem.

4. The new schema information from Database Server 2 is detected in the open metadata ecosystem and distributed to the Analytics Workbench.

Four integration steps to capture and distribute the database schema metadata from Database Server 1.
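
Deploying new schema information to a target (as in the second part) implies working out how the target differs from the source. One possible approach, sketched below, is a column-level comparison. It assumes a schema can be simplified to a mapping of column name to data type; `schema_diff` and the sample schemas are hypothetical.

```python
def schema_diff(source: dict[str, str], target: dict[str, str]) -> dict:
    """Describe the changes needed to make the target schema match the source."""
    return {
        # Columns in the source that the target does not have yet.
        "added": {col: dtype for col, dtype in source.items() if col not in target},
        # Columns the target still has but the source has dropped.
        "removed": sorted(col for col in target if col not in source),
        # Columns present in both whose type differs: (target type, source type).
        "retyped": {
            col: (target[col], source[col])
            for col in source
            if col in target and source[col] != target[col]
        },
    }


server_1 = {"id": "INT", "email": "TEXT", "age": "INT"}
server_2 = {"id": "INT", "age": "SMALLINT", "fax": "TEXT"}

print(schema_diff(server_1, server_2))
```

An empty result in all three entries means the two schemas are already consistent and the ETL job can run safely.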

Here is another view of the process, but shown as a flow from left to right.

At each stage, there is a trigger (typically detecting that something has changed), metadata is assembled and updated and, when it is ready, made visible through the open metadata ecosystem.

A three-step specification pattern of Trigger, Maintain Metadata and Make Visible.

The implementation of the three-step pattern for each part of the integration is located in an integration connector. Integration connectors are configurable components that are designed to work with a specific third party technology. There would be 4 configured integration connectors to support the scenario above. However, the implementation of the integration connectors for parts 1 and 3 would be the same implementation, just 2 instances, each configured to work with a different database server.
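
The idea of one implementation with two configured instances can be sketched as follows. This is an illustration in Python only: `SchemaCaptureConnector` and its methods are hypothetical and do not reflect Egeria's integration connector interfaces. Each call to `refresh` follows the three-step pattern.

```python
from typing import Callable

Schema = dict[str, str]


class SchemaCaptureConnector:
    """Hypothetical connector that publishes a server's schema when it changes."""

    def __init__(self, server_name: str,
                 read_schema: Callable[[], Schema],
                 publish: Callable[[str, Schema], None]) -> None:
        self.server_name = server_name
        self.read_schema = read_schema
        self.publish = publish
        self._last_seen = None

    def refresh(self) -> bool:
        current = dict(self.read_schema())  # copy so later changes are detected
        if current == self._last_seen:      # Trigger: has anything changed?
            return False
        self._last_seen = current           # Maintain Metadata
        self.publish(self.server_name, current)  # Make Visible
        return True


published: list[tuple[str, Schema]] = []
schemas = {"Database Server 1": {"id": "INT"}, "Database Server 2": {"id": "INT"}}


def make_connector(server: str) -> SchemaCaptureConnector:
    return SchemaCaptureConnector(
        server,
        lambda: schemas[server],
        lambda name, schema: published.append((name, schema)),
    )


# Same implementation, two instances with different configuration.
part_1 = make_connector("Database Server 1")
part_3 = make_connector("Database Server 2")

part_1.refresh()
part_3.refresh()
schemas["Database Server 1"]["email"] = "TEXT"
part_1.refresh()  # publishes the changed schema
part_3.refresh()  # nothing changed, nothing published
print(published)
```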

The integration connectors supplied with Egeria are described in the connector catalog. It is also possible to write your own integration connectors if the ones supplied by Egeria do not meet your needs.

Integration connectors run in the Integration Daemon. It is possible to have all 4 integration connectors running in the same integration daemon. Alternatively, they each may run in a different integration daemon - or any combination in between. The choice is determined by the organization of the teams that will operate the service. For example, if this metadata synchronization process was run by a centralized team, then all 4 integration connectors would probably run in the same integration daemon. If the work is decentralized, the integration connector for part 1 may be in an integration daemon operated by the same team that operates Database Server 1. The other integration connectors may run together in an integration daemon operated by the team that operates Database Server 2 and the Analytics Workbench.

The diagram below shows the decentralized option.

This type of deployment choice keeps control of the metadata integration with the teams that own the third party technology, and so upgrades, back-ups and outages can be coordinated.

The implementation of the open metadata ecosystem that connects the integration daemons can also be centralized or decentralized. This next diagram shows two integration daemons connecting into a centralized metadata access store that provides the open metadata repository.

Alternatively, each team could have its own metadata access store, giving it complete control over its metadata. The two metadata access stores are connected via an Open Metadata Repository Cohort (or just "cohort" for short). The cohort enables the two metadata access stores to operate as one logical metadata store.

The behaviour of the integration daemons is unaffected by the deployment choice made for the metadata access stores.
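The idea of "one logical metadata store" can be pictured with a small sketch. It models only the outcome (a caller finds an element regardless of which team's store holds it) and says nothing about how a cohort is actually implemented; the class names and identifiers are hypothetical.

```python
class MetadataAccessStore:
    """Hypothetical in-memory store owned by one team."""

    def __init__(self, owner):
        self.owner = owner
        self.elements = {}  # unique identifier -> element properties

    def save(self, guid, properties):
        self.elements[guid] = properties


class Cohort:
    """Presents its member stores to callers as a single logical store."""

    def __init__(self, *members):
        self.members = list(members)

    def find(self, guid):
        for member in self.members:
            if guid in member.elements:
                return member.elements[guid]
        return None


team_a_store = MetadataAccessStore("Database Server 1 team")
team_b_store = MetadataAccessStore("Database Server 2 team")
team_a_store.save("guid-1", {"name": "Database Server 1 schema"})
team_b_store.save("guid-2", {"name": "Database Server 2 schema"})
cohort = Cohort(team_a_store, team_b_store)
```

A caller using `cohort.find` does not need to know which store holds the element, which is why the integration daemons are unaffected by this deployment choice.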

Adding lineage¶

In the scenario above, data from Database Server 1 is extracted, anonymized and stored in Database Server 2 by an ETL job running in an ETL engine.

The data science team wants to know the source of each of the databases they are working with. The metadata that describes the source of data is called lineage. Ideally it is captured by the ETL engine to ensure it is accurate.

ETL engines have a long history of capturing lineage, since it is a common requirement in regulated industries. The diagram below shows three choices on how an ETL engine may handle its lineage metadata.

- In the first box on the left, the ETL engine has its own metadata repository and so it is integrated into the open metadata ecosystem via the integration daemon (in the same way as the database and analytics workbench).

- In the middle box, the ETL engine is producing lineage events that follow the OpenLineage Standard. The integration daemon has native support for this standard and so the ETL Engine can send these events directly to the integration daemon which will pass them to any integration connector that is configured to receive them.

- The final box on the right-hand side shows an ETL engine that is part of a suite of tools that share a metadata repository. These types of third party metadata repositories often have a wide variety of metadata that is good for many use cases. So, although it is possible to integrate them through the integration connectors running in the integration daemon, it is also possible to connect them directly into the cohort via a Repository Proxy. This is a more complex integration to perform. However, it has the benefit that the metadata stored in the third party metadata repository is logically part of the open metadata ecosystem and available through any of the open metadata and governance APIs without needing to copy its metadata into a metadata access store.
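For the middle option, it may help to see roughly what a lineage event looks like. The sketch below builds a run event as a Python dictionary using the top-level field names of the OpenLineage run event as I understand them (`eventType`, `eventTime`, `run`, `job`, `inputs`, `outputs`, `producer`, `schemaURL`). The job name, namespaces, producer URL and schema version are placeholders; check the OpenLineage specification for the version you target.

```python
import json
from datetime import datetime, timezone
from uuid import uuid4


def build_run_event(job_name, inputs, outputs):
    """Assemble an OpenLineage-style run event; all values are illustrative."""
    return {
        "eventType": "COMPLETE",
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "producer": "https://example.org/etl-engine",  # hypothetical producer
        # Placeholder version; use the schema URL of the spec you target.
        "schemaURL": "https://openlineage.io/spec/1-0-5/OpenLineage.json#/definitions/RunEvent",
        "run": {"runId": str(uuid4())},
        "job": {"namespace": "etl", "name": job_name},
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [{"namespace": ns, "name": name} for ns, name in outputs],
    }


event = build_run_event(
    "anonymize-patients",                      # hypothetical ETL job name
    inputs=[("database-server-1", "patients")],
    outputs=[("database-server-2", "patients_anonymized")],
)
payload = json.dumps(event)  # what the ETL engine would send
```

The inputs and outputs are what let the data science team trace a database in Database Server 2 back to its source in Database Server 1.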

Summary

In this guide you have seen that integration with the open metadata ecosystem is built up iteratively using integration connectors running in an integration daemon. Open metadata is stored in metadata access stores and shared across the open metadata ecosystem using a cohort. It is also possible to plug in a third party metadata repository using a repository proxy.

Automating metadata capture (30 mins)

Automating metadata capture¶

People are not good at repetitive admin tasks, particularly if these tasks are not the primary focus of their work. Therefore, the open metadata ecosystem is more complete and accurate when automation supports these mundane tasks. Following our three-step model, the automation needs to:

- monitor for triggers that indicate that metadata needs to be updated,

- drive the necessary changes to metadata and then

- make those changes visible to downstream processing.

A three-step specification pattern of Trigger, Maintain Metadata and Make Visible.

Introducing the integration daemon

Inside the Integration Daemon¶

Recap: The Integration Daemon is an Egeria OMAG Server that sits at the edge of the open metadata ecosystem, synchronizing metadata with third party tools. It is connected to a Metadata Access Server that provides the APIs and events to interact with the open metadata ecosystem.

The integration can be:

- Triggered by an event from a third party technology that indicates that metadata needs to be updated in the open metadata ecosystem to make it consistent with the third party technology's configuration.

- Triggered at regular intervals so that the consistency of the open metadata ecosystem with the third party technology can be verified and, where necessary, corrected.

- Triggered by a change in the open metadata ecosystem indicating that changes need to be replicated to the third party technology.

Running in the integration daemon are integration connectors that each support the API of a specific third party technology. The integration daemon starts and stops the integration connectors and provides them with access to the open metadata ecosystem APIs. Its action is controlled by configuration, so you can set it up to exchange metadata with a wide range of third party technologies.

An integration connector is specialized for a particular technology. The integration daemon provides specialized services focused on different types of technology, in order to simplify the work of the integration connector. These specialized services are called the Open Metadata Integration Services (OMISs). Each integration connector is paired with an OMIS, and the OMIS is paired with a relevant Open Metadata Access Service (OMAS) running in a Metadata Access Server.
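The three kinds of trigger listed above can be sketched as three entry points that a daemon routes to a connector. This is an illustrative model only: the class and method names are assumptions and do not reflect the signatures of Egeria's integration daemon or its connectors.

```python
class RecordingConnector:
    """Hypothetical connector that records why it was invoked."""

    def __init__(self):
        self.calls = []

    def handle_third_party_event(self, event):
        self.calls.append(("third-party-event", event))

    def refresh(self):
        self.calls.append(("scheduled-refresh", None))

    def handle_open_metadata_change(self, change):
        self.calls.append(("open-metadata-change", change))


class IntegrationDaemonSketch:
    """Routes each kind of trigger to the configured connector."""

    def __init__(self, connector, refresh_interval_mins):
        self.connector = connector
        self.refresh_interval_mins = refresh_interval_mins
        self.mins_since_refresh = 0

    def third_party_event(self, event):
        self.connector.handle_third_party_event(event)

    def clock_tick(self, minutes):
        # Call refresh only once the configured interval has elapsed.
        self.mins_since_refresh += minutes
        if self.mins_since_refresh >= self.refresh_interval_mins:
            self.mins_since_refresh = 0
            self.connector.refresh()

    def open_metadata_change(self, change):
        self.connector.handle_open_metadata_change(change)


connector = RecordingConnector()
daemon = IntegrationDaemonSketch(connector, refresh_interval_mins=60)
daemon.third_party_event("table added")
daemon.clock_tick(30)
daemon.clock_tick(30)
daemon.open_metadata_change("new schema published")
```

Keeping the routing in the daemon and the technology-specific logic in the connector is what allows the daemon's behaviour to be controlled by configuration.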

Further information

Subject Areas (1.5 hours)

Subject Areas¶

Back at the bus company ...

The new focus on ensuring bus timetable information is accurate and available has created an increase in passengers for the bus company. The assistant who is responsible for managing and disseminating the bus timetable information is not happy, however. What should be a simple task is tedious and complicated because each form of the timetable (at the bus stop, internet download, printed timetables) uses a different format. For example, the master timetable and the timetables for the bus stop are in 24-hour clock, whereas the internet downloadable timetable and the printed timetable use am/pm. This means the assistant has to translate the bus times from one format to another in order to publish a new set of timetables. Similarly, the names of the bus stops are not consistent across the different formats. The assistant realizes that some form of standardization is needed so that the different formats can be created automatically from the master timetables. Otherwise, they are going to look for a new job!

Using standard formats, names and meanings for resources, such as data, is critical to ensure they can be shared and reused for multiple purposes. Open metadata provides the means to describe these standards and use them to create consistency across all copies, formats and uses. The effort required to author and maintain these standards, plus the governance processes required to ensure they are used wherever appropriate, is offset by the savings in managing and using the resources associated with the subject area.
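Once the master timetable's format is agreed, the assistant's manual translation becomes a small, repeatable function. The sketch below assumes the master times are `HH:MM` strings in 24-hour clock; the sample times are invented for illustration.

```python
def to_12_hour(time_24h):
    """Convert an 'HH:MM' 24-hour time into an am/pm time."""
    hours, minutes = (int(part) for part in time_24h.split(":"))
    suffix = "am" if hours < 12 else "pm"
    display_hour = hours % 12 or 12  # 0 and 12 are both shown as 12
    return f"{display_hour}:{minutes:02d} {suffix}"


master_timetable = ["07:05", "12:30", "00:15", "18:45"]
printed_timetable = [to_12_hour(time) for time in master_timetable]
# printed_timetable is ["7:05 am", "12:30 pm", "12:15 am", "6:45 pm"]
```

The same approach applies to bus stop names: with one standard name per stop, each published format can be generated rather than retyped.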

Recap:

- Subject Areas define the standards for your resources and their use. They cover resources that are widely shared across the organization and there is business value in maintaining consistency.

- Each subject area has an owner who is responsible for coordinating the development and maintenance of the subject area's materials.

- Digital Resources that are managed using the subject area's materials are said to be part of the subject area's "domain". This is called the Subject Area Domain and is synonymous with Data Domain - although since a subject area domain may manage resources that are not just data assets (such as systems and infrastructure), open metadata uses a more generic name.

The glossary

The glossary is at the heart of the materials for a subject area. Figure 1 shows that the glossary contains glossary terms. Each glossary term describes a concept used by the business. It is also possible to link two glossary terms together with a relationship. The relationship may describe a semantic relationship or a structural one.

Figure 1: Glossaries for describing concepts and the relationships between them

Semantic relationships include:

- RelatedTerm is a relationship used to say that the linked glossary term may also be of interest. It is like a "see also" link in a dictionary.

- Synonym is a relationship between glossary terms that have the same, or a very similar meaning.

- Antonym is a relationship between glossary terms that have the opposite (or near opposite) meaning.

- PreferredTerm is a relationship that indicates that one term should be used in place of the other term linked by the relationship.

- ReplacementTerm is a relationship that indicates that one term must be used instead of the other. This is a stronger version of the PreferredTerm.

- Translation is a relationship that defines that the linked terms represent the same meaning but each is written in a different language. Hence, one is a translation of the other. The language of each term is defined in the Glossary that owns the term.

- IsA is a relationship that defines that one term is more generic than the other. For example, this relationship would be used to say that "Cat" IsA "Animal".

Structural relationships in the glossary are relationships that show how terms are typically used together.

- UsedInContext links a term to another term that describes a context. This helps to distinguish between terms that have the same name but different meanings depending on the context.

- HasA is a term relationship between a term representing a SpineObject (see glossary term classifications below) and a term representing a SpineAttribute.

- IsATypeOf is a term relationship between two SpineObjects saying that one is the subtype (specialisation) of the other.

- TypedBy is a term relationship between a SpineAttribute and a SpineObject to say that the SpineAttribute is implemented using a type represented by the SpineObject.
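The semantic and structural relationships above can be pictured as labelled links between terms. The sketch below is an assumption-laden model, not the open metadata type system: the `Glossary` class, the direction of each link and every term other than "Cat" and "Animal" are invented for illustration.

```python
from collections import defaultdict

SEMANTIC = {"RelatedTerm", "Synonym", "Antonym", "PreferredTerm",
            "ReplacementTerm", "Translation", "IsA"}
STRUCTURAL = {"UsedInContext", "HasA", "IsATypeOf", "TypedBy"}


class Glossary:
    """Hypothetical glossary holding labelled links between term names."""

    def __init__(self):
        self.links = defaultdict(list)  # term -> [(relationship, other term)]

    def link(self, from_term, relationship, to_term):
        if relationship not in SEMANTIC | STRUCTURAL:
            raise ValueError(f"Unknown relationship: {relationship}")
        self.links[from_term].append((relationship, to_term))

    def related(self, term, relationship):
        return [other for name, other in self.links[term] if name == relationship]


glossary = Glossary()
glossary.link("Cat", "IsA", "Animal")                # example from the text
glossary.link("Bus Stop", "HasA", "Stop Name")       # hypothetical terms
glossary.link("Bus Stop", "RelatedTerm", "Timetable")
```

Validating the relationship name on entry is one simple way to keep a glossary from accumulating ad hoc link types.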

Further information

- See Area 3 in the Open Metadata Types to understand how these concepts are represented on open metadata.

Data classes

A data class provides the specification of a data type that is important to the subject area. Date, Social Security Number and Credit Card Number are examples of data classes.

The data class specification defines how to identify data fields of its type by inspecting the data values stored in them. The specification is independent of a particular technology, which is why they are often described as logical data types. The specification may include preferred implementation types for different technologies using Implementation Snippets.

Data classes are used during metadata discovery (see below) to identify the types of data in the discovered data fields. This is an important step in understanding the meaning and business value of the data fields. They can also be used in quality rules to validate that data values match the prescribed data class.

Data classes can be linked together in part-of and is-a hierarchies to create a logical type system for a subject area. A glossary term can be linked to a data class via an ImplementedBy relationship to identify the preferred data class to use when implementing a data field with meaning described in the glossary term. A data class can be linked to a glossary term that describes the meaning of the data class via a SemanticAssignment relationship.
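One common way to "identify data fields by inspecting the data values" is a pattern plus a match threshold. The sketch below assumes that approach; the `DataClass` fields, the regular expressions and the 90% threshold are illustrative choices, not the open metadata definition of a data class.

```python
import re
from dataclasses import dataclass


@dataclass
class DataClass:
    """Hypothetical data class: a name, a value pattern and a threshold."""
    name: str
    pattern: str
    threshold: float = 0.9  # fraction of values that must match

    def matches(self, values):
        if not values:
            return False
        hits = sum(1 for value in values if re.fullmatch(self.pattern, value))
        return hits / len(values) >= self.threshold


date_class = DataClass("Date", r"\d{4}-\d{2}-\d{2}")
ssn_class = DataClass("Social Security Number", r"\d{3}-\d{2}-\d{4}")

column_values = ["2024-01-31", "2023-12-01", "2022-07-15"]
detected = [dc.name for dc in (date_class, ssn_class) if dc.matches(column_values)]
```

A threshold below 100% lets the same specification double as a quality rule: values that fail the pattern in a field already classified as a Date are candidates for investigation.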

Figure 2: Data classes for describing the logical data types and implementation options

Further information

- See Model 0540 in the Open Metadata Types to understand how data classes are represented on open metadata.

- See Model 0737 in the Open Metadata Types to understand the ImplementedBy relationship.

- See Model 0370 in the Open Metadata Types to understand the SemanticAssignment relationship.

- See Model 0504 in the Open Metadata Types to understand ImplementationSnippets.

Consuming the glossary in design models

Design models (such as Concept models, E-R Models, UML models) and ontologies capture similar concepts to those described in the glossary. It helps if their definitions are consistent. When a new glossary is being built, existing models and ontologies can be used to seed the glossary. The models/ontologies themselves can be loaded in open metadata and the model elements linked to their corresponding glossary terms. Then new versions of the data models/ontologies can be generated from open metadata.

Figure 3: Linking to models

Any linked data classes provide details of language types to use when generating compliant artifacts from the models.

Further information

- See Model 0571 in the Open Metadata Types to understand how concept models are represented on open metadata.

- See Model 0565 in the Open Metadata Types to understand how design models are represented on open metadata.

Schemas

Schemas document the structure of data, whether it is stored or moving through APIs, events and data feeds. A schema is made up of a linked subgraph of schema elements. A schema begins with a schema element called a schema type. This may be a single primitive field, a set of values, an array of values, a map between two sets of values or a nested structure. The nested structure is the most common. In this case the schema type has a list of schema attributes (another type of schema element) that describe the fields in the structure. Each of these schema attributes has its own schema type located in its TypeEmbeddedAttribute classification.

Figure 4 shows a simple schema structure.

Figure 4: Schemas for documenting the structure of data
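The nested structure described above can be sketched as two small classes. This is a simplified model, assuming each schema attribute carries its own schema type directly (standing in for the TypeEmbeddedAttribute classification); the timetable field names are invented.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaType:
    """A schema type; a nested structure lists its schema attributes."""
    name: str
    attributes: list = field(default_factory=list)


@dataclass
class SchemaAttribute:
    """A field in a structure, with its own (possibly nested) schema type."""
    name: str
    schema_type: SchemaType


def field_paths(schema_type, prefix=""):
    """List every field in the schema using dotted path names."""
    paths = []
    for attribute in schema_type.attributes:
        path = f"{prefix}{attribute.name}"
        paths.append(path)
        paths.extend(field_paths(attribute.schema_type, path + "."))
    return paths


stop = SchemaType("struct", [
    SchemaAttribute("name", SchemaType("string")),
    SchemaAttribute("departure_time", SchemaType("string")),
])
timetable = SchemaType("struct", [
    SchemaAttribute("route", SchemaType("string")),
    SchemaAttribute("stop", stop),
])
paths = field_paths(timetable)
```

Walking the subgraph recursively, as `field_paths` does, is the typical way to reach each data field when attaching assignments or classifications.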

Further information

- See Schemas to understand how different types of schema are represented.

- See Model 0501 in the Open Metadata Types to see the formal definition of the different types of schema elements.

- See Model 0505 in the Open Metadata Types to understand schema attributes and the TypeEmbeddedAttribute classification.

Schemas and assets

An asset describes a valuable digital resource. Such resources include databases, data files, documents, APIs, data feeds, and applications. A digital resource can be dependent on other digital resources to fulfill its implementation. These dependencies are also captured in open metadata with relationships such as DataSetContent. These relationships help to highlight inconsistencies in the assets' linkage to the subject area's materials, which may be due to errors in either the metadata or the implementation/deployment/use of the associated digital resources.

Figure 5: Dependencies between digital resources are reflected in open metadata by relationships between assets

Since schema types describe the structure of data, they can be attached to assets using the Schema relationship to indicate that this asset's data is organized as described by the schema. Schemas are important because they show how individual data values are organized. Governance is often concerned with the meaning, correctness and use of individual data values since they are used to influence the decisions made within the organization. Therefore, even though the content of a schema bulks up the size and complexity of the metadata, it is necessary to capture this detail.

Figure 6: Schemas describe the structure of the data store in a digital resource (described by the asset in the catalog)

A schema is typically attached to only one asset since it is classified and linked to other elements assuming that the asset/schema combination describes the particular collection of data stored in the associated digital resource. However, there is still a role for the subject area materials to provide preferred schema structures for software developers, data engineers and data scientists to use when they create implementations of new digital resources.

When a new asset is created, the schema definition in the subject area can be used as a template to define the schema for the asset (see figure 7). Then:

- The digital resource can be generated from the asset/schema, or

- Metadata discovery (see below) can be used to validate that the schema defined in the digital resource matches the schema associated with the asset.

Figure 7: Using a schema from a subject area as a template for a new asset

There is also an opportunity to share schemas between assets using an ExternalSchemaType. This option has the advantage that there is only one copy of the schema. However, it is only used when all classifications and relationships attached to the shared part of the schema apply to all data in the associated digital resources.

Figure 8: Using an external schema type to share a common schema

Further information

- See Model 0503 in the Open Metadata Types to understand the Schema relationship.

- See Model 0501 in the Open Metadata Types to understand how schemas are represented on open metadata.

- See Model 0505 in the Open Metadata Types to understand how schema attributes are represented on open metadata.

Reference value assignments

The materials for a subject area may include sets of values used to label metadata elements to show that they are in a particular state or have a specific characteristic that is important in the subject area. For example, a subject area about people may include the notion of an Adult and a Child (or Minor). The age of majority is different in each country. A simple label assigned to a Person profile to indicate that the person is an adult keeps the knowledge of how to determine adulthood within the maintenance of the person profiles, while the reference data value itself can be used in multiple places.

These labels are called reference data values and are managed in Valid Value Sets. The association between a reference data value and a metadata element is ReferenceValueAssignment.

Figure 9: Labelling using reference data values

Further information

- Reference Data Management describes different uses of valid value sets.

Schema assignments

Figure 10 shows three types of assignments between the metadata associated with a digital resource (technical metadata) and the subject area materials:

- SemanticAssignment - Semantic assignments indicate that the data stored in the associated data field has the meaning described in the glossary term.

- ValidValuesAssignment - Valid value sets define a list of valid values. They can be used to define the values that are allowed to be stored in a particular data field if its content can be described as a discrete set.

- DataClassAssignment - A data class assignment means that the data in the data field conforms to the type described in the data class.

When these relationships are used in combination, there should be consistency between the assignments to the data field and those to the associated glossary term.

Figure 10: Using assignment relationships to create a rich description of the data stored in a schema attribute (data field)
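The consistency expectation can be expressed as a simple check: if a data field has both a semantic assignment and a data class assignment, the data class should agree with the one preferred by the glossary term. The sketch below assumes flat dictionaries for the assignments; the field names and terms are hypothetical.

```python
def find_inconsistencies(data_fields, preferred_data_class):
    """Report fields whose data class differs from their term's preference."""
    problems = []
    for name, assignments in data_fields.items():
        term = assignments.get("SemanticAssignment")
        data_class = assignments.get("DataClassAssignment")
        expected = preferred_data_class.get(term)
        if term and data_class and expected and data_class != expected:
            problems.append(
                f"{name}: term '{term}' prefers '{expected}', "
                f"field uses '{data_class}'"
            )
    return problems


# Glossary term -> preferred data class (the ImplementedBy link).
preferred_data_class = {"Date of Birth": "Date"}

data_fields = {
    "dob": {"SemanticAssignment": "Date of Birth",
            "DataClassAssignment": "Date"},
    "birth": {"SemanticAssignment": "Date of Birth",
              "DataClassAssignment": "Credit Card Number"},
    "notes": {},  # no assignments yet, so nothing to compare
}
problems = find_inconsistencies(data_fields, preferred_data_class)
```

A check of this kind only flags candidates; deciding whether the metadata or the data is wrong still needs a steward.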

Governed data classifications

Governed data classifications can be attached to most types of metadata elements. They can also be assigned to glossary terms to indicate that the classification applies to all data values associated with the glossary term. The governed data classifications have attributes that identify a particular level that applies to the attached element. The definition for each level can be linked to appropriate Governance Definitions that define how digital resources classified at that level should be governed. Governance Classification Levels are linked to Governance Definitions using the GovernedBy relationship.

Figure 11: Classifying glossary terms to identify the governance definitions that apply to all data values associated with the glossary term

Further information

- Setting up your Governance Program describes how different types of governance metadata are used.

Connectors and connections

The digital resources associated with the assets in the catalog are accessed through connectors. A Connector is a client library that applications use to access the data/function held by the digital resource. Typically, there is a specialized connector for each type of Asset/technology.

Sometimes there are multiple connectors to access a specific type of asset, each offering a different interface for the application to use.

Instances of connectors are created using the Connector Broker. The connector broker creates the connector instance using the information stored in a Connection. These can be created by the application or retrieved from the open metadata stores.

A connection is stored in the open metadata stores and linked to the appropriate asset for the digital resource.

Figure 12: Connection information needed to access the data held by an asset
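The relationship between a connection, the connector broker and a connector can be sketched as a small factory. This is a simplified model rather than Egeria's Open Connector Framework API: the class names, the dictionary layout of the connection and the file path are assumptions for illustration.

```python
class CSVFileConnector:
    """Hypothetical connector for a file-based digital resource."""

    def __init__(self, endpoint_address, configuration):
        self.endpoint_address = endpoint_address
        self.configuration = configuration


class ConnectorBrokerSketch:
    """Creates connector instances from the details held in a connection."""

    def __init__(self):
        self._implementations = {}

    def register(self, connector_type, implementation):
        self._implementations[connector_type] = implementation

    def get_connector(self, connection):
        implementation = self._implementations[connection["connectorType"]]
        return implementation(
            connection["endpoint"],
            connection.get("configurationProperties", {}),
        )


broker = ConnectorBrokerSketch()
broker.register("csv-file", CSVFileConnector)

# A connection could equally be retrieved from the open metadata stores.
connection = {
    "connectorType": "csv-file",
    "endpoint": "/data/timetables/master.csv",   # hypothetical location
    "configurationProperties": {"delimiter": ","},
}
connector = broker.get_connector(connection)
```

Because the application only handles the connection, the location and technology of the digital resource can change without changing the application's code.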

Further information

- See the connector catalog to understand how connectors are used in Egeria.

- See Model 0201 in the Open Metadata Types to understand how connections are represented.

Metadata discovery

An open discovery service is a process that runs a pipeline of analytics to describe the data content of a resource. It uses statistical analysis, reference data and other techniques to determine the data class and range of values stored, potentially what the data means and its level of quality. The result of the analysis is stored in metadata objects called annotations.

Part of the discovery process is called Schema Extraction. This is where the discovery service inspects the schema in the digital resource and builds a matching structure of DataField elements in open metadata. As it goes on to analyse the content of a particular data field in the resource, it can add its results to an annotation that is attached to the DataField element. It can also maintain a link between the DataField element and its corresponding SchemaAttribute element if the schema has already been attached. Through this process it is possible to detect any anomalies between the documented schema and what is actually implemented.

Part of the analysis of a single data field may be to identify its data class (or a list of possible data classes if the analysis is not conclusive). The data class in turn may identify a list of possible glossary terms that could apply to the data field.

For example, there may be a data class called address. A discovery service may detect that an address is stored in a digital resource. The data class may be linked to glossary terms for Home Address, Work Location, Delivery Address, ... The discovery service may not be able to determine which glossary term is appropriate in order to establish the SemanticAssignment relationship, but providing a steward with a short list is a considerable saving.

Figure 13: Output from a metadata discovery service
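The address example can be sketched as a lookup from candidate data classes to a short list of glossary terms for a steward to choose from. The dictionary layout and the annotation keys are assumptions for illustration, not the open metadata annotation types.

```python
def shortlist_terms(candidate_data_classes, terms_by_data_class):
    """Collect the glossary terms linked to any of the candidate data classes."""
    shortlist = []
    for data_class in candidate_data_classes:
        for term in terms_by_data_class.get(data_class, []):
            if term not in shortlist:  # keep order, avoid duplicates
                shortlist.append(term)
    return shortlist


terms_by_data_class = {
    "address": ["Home Address", "Work Location", "Delivery Address"],
}

# Hypothetical annotation produced while analysing one data field.
annotation = {"dataField": "addr_1", "candidateDataClasses": ["address"]}
annotation["candidateGlossaryTerms"] = shortlist_terms(
    annotation["candidateDataClasses"], terms_by_data_class
)
```

The steward then picks one term from `candidateGlossaryTerms` to establish the SemanticAssignment, rather than searching the whole glossary.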

Further information

- See Discovery and Stewardship to understand how metadata discovery works.

- See Area 6 in the Open Metadata Types to understand how discovery metadata is represented.

Bringing it all together

Figure 14 summarizes how the subject area materials create a rich picture around the resources used by your organization. As they link to the technical metadata, they complement and reinforce the understanding of your data. In a real-world deployment, the aim is to automate as much of this linkage as possible. This is made considerably easier if the implementation landscape is reasonably consistent. However, where the stored data values do not match the expected types defined in the schema, the metadata model reveals the inconsistencies and often requires human intervention to ensure the links are correct.

Figure 14: Linking the metadata together

Defining subject areas in Egeria

- Governance Program OMAS provides support for the definition of subject areas and the ability to retrieve details of the materials that are part of the subject area.

- Digital Architecture OMAS supports the definition of valid value sets and quality rules for the subject area.

- Asset Manager OMAS supports the management of glossaries and the exchange of subject area materials with other catalogs and quality tools.

- The Defining Subject Areas scenario for Coco Pharmaceuticals walks through the process of setting up its subject areas. There are two code samples associated with this set of subject areas:

- Setting up the subject area definitions

- Setting up glossary categories for each subject area ready for subject area owners to start defining glossary terms associated with their subject area.

Coco Pharmaceuticals Scenarios

There are descriptions of creating glossaries and other materials for subject areas in the Coco Pharmaceuticals Scenarios.

Using automated governance actions (3.5 hours)

Using automated governance actions¶

The open metadata ecosystem collects, links and disseminates metadata from many sources. Inevitably there will be inconsistencies and errors in the metadata, and there need to be mechanisms that help identify errors and control how they are corrected. This section focuses on the automated processes that validate, correct and enrich the metadata in the open metadata ecosystem.

The shearing layers of governance actions (1 hour)

The building industry has the principle of shearing layers in the design of a building. This principle is as follows:

... Things that need to change frequently should be easy to change. Those aspects that change infrequently can take more effort and time.

In Egeria, the shearing layer principle is evident in the design of automated governance. An organization that is maturing their governance capability needs to be able to move fast. These automations need to be quick to create and quick to change. There is no time to wait for a software developer to code each one.

Egeria defines flexible components called governance services that can be re-configured and reused in many situations. Collectively the governance services form a palette of configurable governance functions. The governance team link them together into a new process every time they need a new governance automation.

The advantage of this approach is the ability to rapidly scale out your governance capability. The downside is that there are more moving parts and concepts to understand.

The diagram below summarizes how Egeria's governance automation works. Descriptions of each layer follow the diagram.

At the base are the governance service components

Governance services are coded in Java and packaged in Java Archives (JAR files). They need to be passed information about the function to perform and the metadata elements on which to operate since this will be different each time they are called.

Part of the implementation is a connector provider that is able to return a description of its governance service in the form of a connector type. The connector type provides information about how to configure and run the governance service. This includes:

- A description of the connector's function

- Names of configuration properties that can modify the behaviour of the governance service.

- A list of request types that select which function it is to perform.

- Names of request parameters that can be supplied (typically by the caller) that can override the configuration properties and/or provide the identifier(s) of any metadata element(s) to work on.

- Names of supported action targets that provide links to the metadata element(s) to work on. The action target mechanism is typically used when governance services are being called in a sequence from a governance action process. The action targets are used to pass details of the metadata elements to work on from service to service.

The governance service definitions

The JAR file is added to the CLASSPATH of Egeria's platform where it can be loaded and inspected. The architect extracts the connector type of the governance service implementation and creates at least one governance service definition for it. The governance service definition is metadata that includes a GovernanceService entity, a Connection entity and a ConnectorType entity (based on the connector type extracted from the implementation) linked together. The connection entity will include the settings for the various configuration properties described in the connector type. If different combinations of configuration properties are desired, they are configured in different governance service definitions.

The governance engine definitions

The architect then builds a governance engine definition. This is metadata that defines a list of governance request types. These are the names of the functions needed by the governance team.

Each governance request type is mapped to a governance service definition (defined above). The governance engine definition can include a mapping from the governance request type to a request type understood by the governance service implementation (called the serviceRequestType). Without this mapping, the governance request type is passed directly to the governance service implementation when it is called.
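The mapping rule can be sketched as a lookup with a fallback. The dictionary layout, the request type names and the service names below are hypothetical; only the `serviceRequestType` property name comes from the description above.

```python
def resolve_request(engine_definition, governance_request_type):
    """Return the governance service and the request type to pass to it."""
    entry = engine_definition[governance_request_type]
    # Without a mapping, the governance request type is passed through as-is.
    service_request_type = entry.get("serviceRequestType") or governance_request_type
    return entry["governanceService"], service_request_type


# Hypothetical governance engine definition.
engine_definition = {
    "quarantine-file": {
        "governanceService": "move-file-service",
        "serviceRequestType": "move-file",
    },
    "validate-asset": {
        "governanceService": "asset-validation-service",
    },
}

mapped = resolve_request(engine_definition, "quarantine-file")
unmapped = resolve_request(engine_definition, "validate-asset")
```

The mapping lets the governance team name functions in their own vocabulary while reusing a service implementation that knows nothing about those names.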

Typically, the governance engine definition is packaged in an open metadata archive called a governance engine pack. This can be loaded into any platform that is going to run the governance engine/services.

Engine actions

The governance engine is configured in an Engine Host server running on the platform. The governance engine can be called by creating an engine action. This is a metadata entity that describes the governance request type and request parameters to run on a specific governance engine. The engine action content is broadcast to all the running engine hosts via the Governance Engine OMAS Out Topic. On receiving this event, each engine host consults its active governance engines to see if the governance request type is supported. The first engine host to detect the new engine action will claim the engine action, which changes its status from WAITING to ACTIVATING in the open metadata ecosystem. The successful Engine Host then passes the request to its governance engine to execute and the engine action's status moves to IN_PROGRESS. The results of the execution are also stored in the engine action including the final status (ACTIONED, INVALID or FAILED).
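The claim-and-run lifecycle can be sketched as a small state machine. The status names come from the description above; everything else (class names, the `claim` method, how a failure is detected) is an assumption, and the sketch does not model when INVALID is set.

```python
from enum import Enum


class Status(Enum):
    WAITING = 1
    ACTIVATING = 2
    IN_PROGRESS = 3
    ACTIONED = 4
    INVALID = 5
    FAILED = 6


class EngineAction:
    def __init__(self, request_type):
        self.request_type = request_type
        self.status = Status.WAITING
        self.claimed_by = None

    def claim(self, host_name):
        """Only the first host to claim a WAITING action succeeds."""
        if self.status is not Status.WAITING:
            return False
        self.status = Status.ACTIVATING
        self.claimed_by = host_name
        return True


class EngineHost:
    def __init__(self, name, supported_request_types):
        self.name = name
        self.supported = set(supported_request_types)

    def on_engine_action(self, action, run):
        if action.request_type not in self.supported:
            return False
        if not action.claim(self.name):
            return False
        action.status = Status.IN_PROGRESS
        try:
            run(action)
            action.status = Status.ACTIONED
        except Exception:
            action.status = Status.FAILED
        return True


action = EngineAction("validate-asset")          # hypothetical request type
host_1 = EngineHost("host-1", ["validate-asset"])
host_2 = EngineHost("host-2", ["validate-asset"])
ran_on_1 = host_1.on_engine_action(action, run=lambda a: None)
ran_on_2 = host_2.on_engine_action(action, run=lambda a: None)
```

The claim step is what prevents two engine hosts that both support the request type from running the same engine action twice.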

Governance services produce one or more guards when they complete. Guards describe the outcome of running the governance service. They are stored in the governance action entity that kicked off the governance service. The governance action entities provide an audit trail of the automated governance actions that were requested, and their outcome.

Governance action processes

Governance action processes are defined in metadata using a set of linked governance action process steps. They are choreographed in a Metadata Access Server running the Governance Server OMAS. When the process is called to run, the Governance Server OMAS navigates to the first governance action process step in the governance action process flow. It creates a matching engine action entity. This is picked up by the engine host and executed in the governance engine just as if it was called independently. The guards are returned by the engine action as it completes. This change is detected by Governance Server OMAS which uses the guards to navigate to the next governance action process step(s) found in the governance action process flow. An engine action is created for each of the next governance action process steps and the cycle is repeated until there are no more governance action process steps in the governance action process flow to execute.
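The guard-driven navigation can be sketched as a loop over a table of transitions. This is an illustrative model only: the step names, guards and the in-process function calls stand in for engine actions that would really be created as metadata and executed by an engine host.

```python
def run_process(first_step, transitions, services):
    """Run steps in order, following the guards each step produces.

    transitions maps (step, guard) to the list of next steps.
    services maps each step to a callable returning its list of guards.
    """
    executed = []
    pending = [first_step]
    while pending:
        step = pending.pop(0)
        executed.append(step)
        for guard in services[step]():
            pending.extend(transitions.get((step, guard), []))
    return executed


# Hypothetical process definition.
transitions = {
    ("validate-asset", "valid"): ["assign-owner"],
    ("validate-asset", "invalid"): ["notify-steward"],
}
services = {
    "validate-asset": lambda: ["valid"],
    "assign-owner": lambda: ["owner-assigned"],
    "notify-steward": lambda: ["steward-notified"],
}
executed = run_process("validate-asset", transitions, services)
```

Because the flow lives in the `transitions` data rather than in code, changing the process means editing the definition, which mirrors how a governance action process is changed by updating its process step metadata. Note that this sketch does not guard against cycles in the flow.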

A governance action process can be run many times with different parameters. It can be changed simply by updating the governance action process step metadata entities in the governance action process definition. New processes can be created by creating the appropriate governance action process definition.

If a desired request type cannot be supported by the existing governance services, a developer is asked to extend a governance service implementation or create a new one that can be configured into a governance engine to support the desired governance request type.

Governance services supplied with Egeria

Designing your governance processes (30 mins)

A governance action process is a predefined sequence of engine actions that are coordinated by the Governance Server OMAS.

The steps in a governance action process are defined by linked governance action process steps stored in the open metadata ecosystem. Each governance action process step provides the specification of the governance action to run. The links between them show which guards cause the next step to be chosen and hence, the governance action to run.

The governance action process support enables governance professionals to assemble and monitor governance processes without needing to be a Java programmer.

Examples¶

In the two examples below, each of the rounded boxes represents a governance action and each link between them is a possible flow, where the label on the link is the guard that must be provided by the predecessor if the linked governance action is to run.

The governance actions in example 1 are all implemented using governance action services. When these services complete, they supply a completion status. If a service completes successfully, it optionally supplies one or more guards and a list of action targets for the subsequent governance action(s) to process.

The first governance action in example 1 is called when a new asset is created. For example, the Generic Element Watchdog Governance Action Service could be configured to monitor for new/refresh events for particular types of assets and initiate this governance process when this type of event occurs.

The first governance action to run is Validate Asset. It retrieves the asset and tests that it has the expected classifications assigned. The guards it produces control which actions follow.
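As an illustration of how a validation step might turn its findings into guards, the sketch below checks an asset for expected classifications. It is an assumption-laden example rather than the Validate Asset implementation: the `validate_asset` function, the dictionary layout of the asset, the guard names and the chosen classification names are all illustrative.

```python
from typing import Dict, List


def validate_asset(asset: Dict[str, List[str]], expected: List[str]) -> List[str]:
    """Return one guard per missing classification, or a single success guard."""
    present = set(asset.get("classifications", []))
    missing = [name for name in expected if name not in present]
    if not missing:
        return ["asset-valid"]
    # One guard per gap, so each gap can trigger its own follow-on action.
    return [f"missing-{name.lower()}" for name in missing]


expected = ["Ownership", "Confidentiality"]  # illustrative classification names
guards = validate_asset({"classifications": ["Ownership"]}, expected)
```

Returning a separate guard for each missing classification means that an asset with several gaps produces several guards, and the follow-on actions linked to those guards can each be started.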

Governance actions from the same governance action process can run in parallel if the predecessor governance action produces multiple guards.

Example 1: Governance Action Process to validate and augment a newly created asset

Governance action processes can include any type of governance service. Example 2 shows a survey action service amongst the governance action services.

Example 2: Governance Action Process to validate and augment a newly created asset

Capturing lineage for a governance action process¶

The engine actions generated when a governance action process runs provide a complete audit trace of the governance services that ran and their results. The Governance Action Open Lineage Integration Connector is able to monitor the operation of the governance actions and produce OpenLineage events to provide operational lineage for governance action processes. Egeria is also able to capture these events (along with OpenLineage events from other technologies) for later analysis.

Governance Action Process Lifecycle¶

The diagram below shows a governance action process assembly tool taking in information from a governance engine pack to build a governance action process flow. This is shared with the open metadata ecosystem either through direct calls to the Governance Server OMAS or via an open metadata archive (possibly the archive that holds the governance engine definition).

Once the definition of the governance action process is available, an instance of the process can be started, either by a watchdog governance action service or through a direct call to the Governance Server OMAS. Whichever mechanism is used, it results in the Governance Server OMAS using the definition to choreograph the creation of engine action entities that drive the execution of the governance services in the Engine Host.

Further information

- The 0462 Governance Action Processes model shows how the governance action process flow is built out of governance action process steps.

- Governance action processes may be created using the Governance Server OMAS API.

- The Open Metadata Engine Services (OMES) provide the mechanisms that support the different types of governance engines. These engines run the governance services that execute the engine actions defined by the governance action process.

Setting up an engine host, governance engines and services (30 mins)

Setting up the governance engine¶

Recap: A governance engine runs in an Engine Host on an OMAG Server Platform.

Like all types of OMAG Servers, the Engine Host is configured through Egeria's Administration Service and the result is a configuration document for the server.

The configuration document is loaded when the Engine Host is started. It contains a list of the governance engines that are to run in the Engine Host. The configuration document also identifies the metadata access server that the Engine Host is paired with, and from which the named Governance Engine Definitions will be retrieved.

For each governance engine name listed in the configuration document, the Engine Host calls its metadata access server to retrieve its Governance Engine Definition. Based on the contents of the Governance Engine Definition, the Engine Host starts up the appropriate Open Metadata Engine Services (OMESs) that hold the logic to run the different types of Governance Services defined in the Governance Engine Definition.

The Governance Engine Definition does not need to be loaded directly into the metadata access server paired with the Engine Host. It just needs to be in one of the Metadata Access Stores connected to the same cohort. A federated query is used to retrieve the Governance Engine Definition. This searches across all the connected Metadata Access Stores. In fact, different parts of the Governance Engine Definition could be in different Metadata Access Stores. The team that builds the Governance Services may publish their Governance Service Definitions to their local metadata store. An architect team responsible for building the Governance Engine Definition may have their own Metadata Access Store that holds the GovernanceEngine entity and the relationships to the GovernanceService entities in the Governance Services team's Metadata Access Store. As long as they are all connected by a cohort, they can all operate as if the whole Governance Engine Definition was in a single Metadata Access Store.
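The effect of the federated query can be illustrated with a small sketch that assembles one definition from parts held in different stores. This is not how Egeria's repository services are implemented: the `resolve_engine_definition` function, the dictionary layout of each store, and the engine, service and class names are hypothetical.

```python
from typing import Dict, List

# Each store is modelled as:
#   {"engines":  {engine name -> {request type -> service name}},
#    "services": {service name -> implementation class name}}
Store = Dict[str, Dict[str, object]]


def resolve_engine_definition(engine_name: str, stores: List[Store]) -> Dict[str, str]:
    """Build request type -> implementation class by searching every store."""
    supported: Dict[str, str] = {}
    for store in stores:
        # The engine entity and its links may live in any connected store.
        supported.update(store.get("engines", {}).get(engine_name, {}))
    definition: Dict[str, str] = {}
    for request_type, service_name in supported.items():
        for store in stores:
            implementation = store.get("services", {}).get(service_name)
            if implementation is not None:
                definition[request_type] = implementation
                break
    return definition


# Hypothetical cohort: architects own the engine, another team owns the services.
architect_store: Store = {
    "engines": {"AssetGovernance": {"validate-asset": "ValidateAssetService"}},
}
services_store: Store = {
    "services": {"ValidateAssetService": "org.example.ValidateAssetConnector"},
}
definition = resolve_engine_definition(
    "AssetGovernance", [architect_store, services_store])
```

The caller sees a single, complete definition even though neither store holds all of it, which is the behaviour the cohort provides to the Engine Host.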

Recap: calls to a governance engine are made by initiating an Engine Action. This can be directly through an API call to the Governance Server OMAS running in a Metadata Access Store, or via a Governance Action Process.

A Governance Action is an entity in open metadata. When it is created in the Metadata Access Store, an event is sent to all connected Engine Hosts. If the Governance Action is for a governance request type that one of an Engine Host's governance engines supports, that Engine Host claims the Governance Action and passes the request to its governance engine to run.

The nature of Egeria's open metadata ecosystem means that a Governance Action can be created in the paired Metadata Access Store ...

... or in a connected Metadata Access Store. Therefore, the Governance Services are available to any member of the open metadata ecosystem. They do not need to know where the Engine Hosts are deployed.

Further information

Using metadata discovery (1 hour)

Metadata discovery and stewardship¶

Metadata discovery is an automated process that extracts metadata about a digital resource. This metadata may be:

- embedded within the asset (for example a digital photograph has embedded metadata), or

- managed by the platform that is hosting the asset (for example, a relational database platform maintains schema information about the data stored in its databases), or

- determined by analysing the content of the asset (for example a quality tool may analyse the data content to determine the types and range of values it contains and, maybe from that analysis, determine a quality score for the data).

Some metadata discovery may occur when the digital resource is first catalogued as an asset. Integrated cataloguing typically automates the creation of the basic asset entry, its connection and, optionally, its schema. This is sometimes called technical metadata.

Cataloguing database with integrated cataloguing

For example, the schema of a database may be catalogued through the Data Manager OMAS API. This schema may have been automatically extracted by an integration connector hosted in Egeria's Database Integrator OMIS.

The survey action services build on this initial cataloguing. They use advanced analysis to inspect the content of a digital resource to derive new insights that can augment or validate their catalog entry.

The results of this analysis are added to a survey report linked off of the asset for the digital resource.

The analysis results documented in the survey report can either be automatically applied to the asset's catalog entry or go through a stewardship process where a subject-matter expert confirms the findings (or not).

Discovery and stewardship are the most advanced form of automation for asset cataloging. Egeria provides the server runtime environment and component framework to allow third parties to create survey action services and governance action implementations. It has only simple implementations of these components, mostly for demonstration purposes. This is an area where vendors and other open source projects are expected to provide additional value.

Survey action services¶

A survey action service is a component that performs analysis of the contents of a digital resource on request. The aim of the survey action service is to enable a detailed picture of the properties of a resource to be built up.

Each time a survey action service runs to analyse a digital resource, it creates a new survey report, linked off of the digital resource's Asset metadata element, that records the results of the analysis. If the survey action service is run regularly, it is possible to track how the contents are changing over time.

The survey report contains one or more sets of related properties that the survey action service has discovered about the resource, its metadata, structure and/or content. These are stored in a set of annotations linked off of the survey report.

A survey action service is designed to run at regular intervals to gather a detailed perspective on the contents of the digital resource and how they are changing over time. Each time it runs, it is given access to the results of previously run survey action services, along with a review of these findings made by individuals responsible for the digital resource (such as stewards, owners, custodians).

Operation of a survey action service

- Each time a survey action service runs, Egeria creates a survey report to describe the status and results of the survey action service's execution. The survey action service is passed a survey context that provides access to metadata.

- The survey context is able to supply metadata about the asset and create a connector to the digital resource using the connection information linked to the asset. The survey action service uses the connector to access the digital resource's contents in order to perform the analysis.

- The survey action service creates annotations to record the results of its analysis. It adds them to the survey context which stores them in open metadata attached to the survey report.

- The annotations can be reviewed and commented on through an external stewardship process. This means choices from, for example, a list of potential options proposed by the survey action services, can be verified and the best one selected by an individual expert. The resulting choices are added to annotation reviews attached to the appropriate annotations.

- The next time the survey action service runs, a new survey report is created to link new attachments.

- The survey context provides access to the existing attachments for that asset along with any annotation reviews. The survey action service is able to link its new annotations to the existing annotations as an annotation extension. This means that the stewards can see the history associated with the new information.
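The operation described in the list above can be sketched in a few lines. This is a toy model under stated assumptions, not the survey action framework's API: the `Asset`, `SurveyReport` and `Annotation` classes and the `run_survey` function are hypothetical, the asset's `rows` list stands in for the connector to the digital resource, and annotation reviews are left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Annotation:
    annotation_type: str
    values: Dict[str, object]
    extends: Optional["Annotation"] = None  # link to the earlier annotation


@dataclass
class SurveyReport:
    asset_name: str
    annotations: List[Annotation] = field(default_factory=list)


@dataclass
class Asset:
    name: str
    rows: List[Dict[str, object]]  # stands in for the resource's content
    reports: List[SurveyReport] = field(default_factory=list)


def run_survey(asset: Asset) -> SurveyReport:
    """Profile each column and record the results in a new survey report."""
    report = SurveyReport(asset.name)
    previous = asset.reports[-1].annotations if asset.reports else []
    earlier = {a.annotation_type: a for a in previous}
    columns = sorted({column for row in asset.rows for column in row})
    for column in columns:
        values = [row[column] for row in asset.rows if row.get(column) is not None]
        types = sorted({type(v).__name__ for v in values})
        comparable = len(types) == 1  # only compute a range for a single type
        annotation_type = f"profile:{column}"
        report.annotations.append(Annotation(
            annotation_type,
            {"count": len(values), "types": types,
             "min": min(values) if comparable else None,
             "max": max(values) if comparable else None},
            extends=earlier.get(annotation_type)))
    asset.reports.append(report)  # every run adds a report; none are replaced
    return report


orders = Asset("orders", [{"qty": 1}, {"qty": 5}])
first = run_survey(orders)
orders.rows.append({"qty": 9})
second = run_survey(orders)
```

Because each run appends a new report and links its annotations back to the matching ones from the previous run, a steward could follow the `extends` links to see how a column's profile has changed between surveys.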